According to a 2017 report in The Economist, data has overtaken oil as the world's most valuable resource. Data has become a key commodity in today's fast-growing digital economy, and data science has emerged as one of the most promising and fastest-growing industries worldwide. As a result, demand for data scientists and engineers has risen sharply.

Data engineering covers the collection, processing and storage of data. Its aim is to gather relevant data from various sources, then sort, clean and store it. Converting raw data into clean, well-structured data is what makes downstream work such as data visualisation, data science solutions and business analytics possible.

One of the earliest documented uses of data analytics was in shaping public policy to improve sanitation for British soldiers. - DataCamp

Data science owes much of its growing popularity and practical impact to data engineering. Well-built data infrastructure is a major reason analysts no longer need to spend hours or days preparing data before they can tackle complex business problems. The field therefore demands a thorough understanding of current technologies and tools, along with the ability to process large volumes of data quickly and efficiently.

This blog explores a few of the must-know concepts in data engineering.

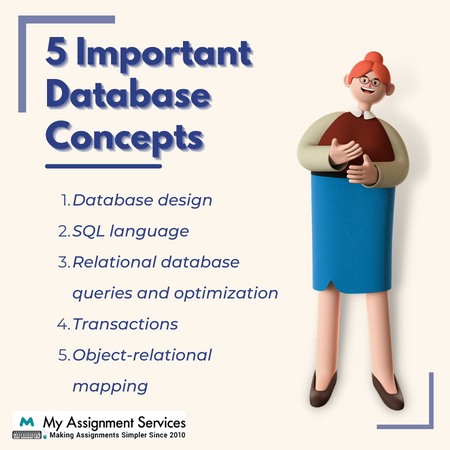

What are some Basic Database Concepts?

The primary aim of data engineering is to provide an organised, reliable flow of data that feeds data-driven work such as machine learning (ML) models and analysis. Many organisations and departments depend on this flow, and data engineers build data pipelines to deliver it. A pipeline is made up of independent programs, each handling one stage, so that the many processes that read from and write to the database run smoothly. Here are a few concepts every data engineer should know:
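To make the idea of a pipeline concrete, here is a minimal extract-transform-load (ETL) sketch. It assumes a small, hypothetical CSV export as the source and an in-memory SQLite database (via Python's built-in `sqlite3` module) as the target; the `extract`, `transform` and `load` functions and the `orders` table are illustrative names, not part of any specific tool.

```python
import csv
import io
import sqlite3

# Hypothetical raw export from a source system: note the stray whitespace,
# inconsistent capitalisation and a row with a missing amount.
RAW_CSV = """order_id,customer,amount
1, alice ,19.99
2,BOB,5.00
3,carol,
"""

def extract(text):
    """Extract: read raw rows from a CSV source."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows):
    """Transform: clean values and drop rows that fail basic validation."""
    clean = []
    for row in rows:
        amount = row["amount"].strip()
        if not amount:  # skip incomplete records
            continue
        clean.append((int(row["order_id"]), row["customer"].strip().lower(), float(amount)))
    return clean

def load(conn, rows):
    """Load: write the cleaned rows into a target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()

conn = sqlite3.connect(":memory:")
load(conn, transform(extract(RAW_CSV)))
print(conn.execute("SELECT * FROM orders ORDER BY order_id").fetchall())
```

Keeping each stage a separate function mirrors the "independent programs" idea: any stage can be swapped (say, a different source) without touching the others.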

1. Relational Model

The relational model organises data into tables. Each table has a predefined structure, called a schema, and only data that conforms to the schema can be added to the table. In the model's original terminology, tables are called relations, hence the name "relational model".
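As a small illustration of schema enforcement, the sketch below uses SQLite through Python's `sqlite3` module; the `customers` table and its columns are hypothetical. Be aware that SQLite's column typing is more flexible than that of many other relational databases, so this example relies on the `NOT NULL` constraint and on the fixed column list rather than on type checks.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# The schema: every row in "customers" has exactly these columns,
# and "name" may not be NULL.
conn.execute("""
    CREATE TABLE customers (
        id   INTEGER PRIMARY KEY,
        name TEXT NOT NULL,
        city TEXT
    )
""")

# A row that matches the schema is accepted.
conn.execute("INSERT INTO customers (id, name, city) VALUES (1, 'Alice', 'Sydney')")

# Rows that violate the schema are rejected by the database.
rejected = []
for sql in (
    "INSERT INTO customers (id, city) VALUES (2, 'Perth')",              # missing required name
    "INSERT INTO customers (id, name, email) VALUES (3, 'Bob', 'b@x')",  # column not in schema
):
    try:
        conn.execute(sql)
    except sqlite3.Error as exc:
        rejected.append(type(exc).__name__)

print(rejected)  # ['IntegrityError', 'OperationalError']
print(conn.execute("SELECT COUNT(*) FROM customers").fetchone()[0])  # 1
```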

2. Data Normalisation

Data normalisation is the process of organising tables to reduce data redundancy. Storing each fact only once simplifies the data structure, improves data integrity and prevents update anomalies, where the same value must be changed in several places and the copies can fall out of sync. It is one of the core concepts of database design.
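Here is a minimal sketch of one normalisation step, using plain Python data structures and hypothetical order data. Customer details depend only on `customer_id`, not on the order itself, so they are moved into their own table; this is roughly the kind of split that moving a table toward third normal form involves.

```python
# Denormalised: customer details are repeated on every order row.
orders_flat = [
    {"order_id": 1, "customer_id": 10, "customer_name": "Alice", "customer_city": "Sydney", "amount": 20.0},
    {"order_id": 2, "customer_id": 10, "customer_name": "Alice", "customer_city": "Sydney", "amount": 35.0},
    {"order_id": 3, "customer_id": 11, "customer_name": "Bob", "customer_city": "Perth", "amount": 12.5},
]

def normalise(rows):
    """Split flat rows into a customers table (one entry per customer)
    and an orders table that refers to customers by id only."""
    customers = {}
    orders = []
    for row in rows:
        cid = row["customer_id"]
        # Each customer's details are stored once, keyed by customer_id.
        customers.setdefault(cid, {"name": row["customer_name"], "city": row["customer_city"]})
        orders.append({"order_id": row["order_id"], "customer_id": cid, "amount": row["amount"]})
    return customers, orders

customers, orders = normalise(orders_flat)

# Changing Alice's city now touches one record instead of every order row.
customers[10]["city"] = "Melbourne"
print(customers)
print(orders)
```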

3. Primary and Foreign Keys

Data engineers work with many tables when processing or analysing a dataset, and keys are what identify rows and link tables together. A primary key is a column (or set of columns) whose value uniquely identifies each row in a table. A foreign key is a column in one table that refers to the primary key of another table, linking related rows across the two.
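The sketch below shows both kinds of key in SQLite via Python's `sqlite3` module. The `customers` and `orders` tables are hypothetical, and note one SQLite-specific detail: foreign keys are only enforced after `PRAGMA foreign_keys = ON` is issued on the connection.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# SQLite enforces foreign keys only when this pragma is switched on.
conn.execute("PRAGMA foreign_keys = ON")

conn.executescript("""
    CREATE TABLE customers (
        id   INTEGER PRIMARY KEY,   -- primary key: unique for every row
        name TEXT NOT NULL
    );
    CREATE TABLE orders (
        id          INTEGER PRIMARY KEY,
        customer_id INTEGER NOT NULL REFERENCES customers(id),  -- foreign key
        amount      REAL
    );
    INSERT INTO customers VALUES (1, 'Alice');
    INSERT INTO orders VALUES (100, 1, 19.99);
""")

def try_insert(sql):
    """Run an INSERT and report whether the database accepted it."""
    try:
        conn.execute(sql)
        return "accepted"
    except sqlite3.IntegrityError as exc:
        return f"rejected: {exc}"

print(try_insert("INSERT INTO customers VALUES (1, 'Bob')"))   # duplicate primary key
print(try_insert("INSERT INTO orders VALUES (101, 99, 5.0)"))  # customer 99 does not exist

# The key relationship lets us join each order to its customer.
print(conn.execute("""
    SELECT o.id, c.name, o.amount
    FROM orders AS o JOIN customers AS c ON c.id = o.customer_id
""").fetchall())
```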

4. Indexes

In a huge table with millions of rows, finding the subset that satisfies a specific criterion can be slow, because without help the database must scan every row. Building an index on the searched column speeds this up: the index maps each value in that column to the locations of the rows that contain it, so the database can go straight to the matching rows.
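The toy sketch below contrasts a full scan with an index lookup in plain Python. It is a simplification: the index here is a hash map from column value to row positions, whereas relational databases typically use B-tree indexes (created in SQL with a statement such as `CREATE INDEX idx_city ON customers(city)`), which also support range queries. All names are hypothetical.

```python
from collections import defaultdict

# A toy table: each row's position in the list stands in for its storage location.
rows = [
    {"id": 1, "city": "Sydney"},
    {"id": 2, "city": "Perth"},
    {"id": 3, "city": "Sydney"},
    {"id": 4, "city": "Hobart"},
]

def full_scan(table, column, value):
    """Without an index: examine every row, O(n) per lookup."""
    return [row for row in table if row[column] == value]

def build_index(table, column):
    """Map each value of `column` to the positions of the rows holding it."""
    index = defaultdict(list)
    for position, row in enumerate(table):
        index[row[column]].append(position)
    return index

def indexed_lookup(table, index, value):
    """With an index: jump straight to the matching rows."""
    return [table[pos] for pos in index.get(value, [])]

city_index = build_index(rows, "city")
print(dict(city_index))  # {'Sydney': [0, 2], 'Perth': [1], 'Hobart': [3]}
print(indexed_lookup(rows, city_index, "Sydney") == full_scan(rows, "city", "Sydney"))  # True
```

The trade-off is that the index must be updated on every insert, update or delete, which is why indexes are usually added only to columns that are searched often.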

5. Transactions

In data engineering, a transaction is an indivisible unit of work: a group of operations, often touching several data points, that is treated as a single step. Either every operation in the transaction succeeds, or none of them take effect. A classic example is a bank transfer: if the money is credited to one account but the debit from the other account fails, the whole transaction is rolled back.
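The bank-transfer example can be sketched with SQLite and Python's `sqlite3` module, whose connection object, used as a context manager, commits on success and rolls back if an exception escapes the block. The `accounts` table and the `transfer` helper are hypothetical; a `CHECK` constraint stands in for the "no overdraft" business rule that makes the second step fail.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
with conn:
    conn.execute("""
        CREATE TABLE accounts (
            id      INTEGER PRIMARY KEY,
            balance INTEGER NOT NULL CHECK (balance >= 0)  -- no overdrafts
        )
    """)
    conn.executemany("INSERT INTO accounts VALUES (?, ?)", [(1, 100), (2, 50)])

def transfer(conn, src, dst, amount):
    """Move `amount` from src to dst as one all-or-nothing unit."""
    try:
        # Commits if the block finishes; rolls back if an exception escapes it.
        with conn:
            conn.execute("UPDATE accounts SET balance = balance + ? WHERE id = ?", (amount, dst))
            conn.execute("UPDATE accounts SET balance = balance - ? WHERE id = ?", (amount, src))
        return True
    except sqlite3.IntegrityError:
        # The debit broke the CHECK constraint, so the credit was undone too.
        return False

def balances(conn):
    return dict(conn.execute("SELECT id, balance FROM accounts"))

print(transfer(conn, 1, 2, 30), balances(conn))   # True {1: 70, 2: 80}
print(transfer(conn, 1, 2, 500), balances(conn))  # False {1: 70, 2: 80}
```

The credit runs before the debit on purpose: when the debit fails, the rollback visibly undoes a change that had already been applied.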

6. Replication

As the name suggests, replication is the process of copying the same data to multiple nodes (servers). Keeping these copies synchronised protects the data and improves availability: if one node fails, another still holds a complete copy.
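Below is a minimal in-memory sketch of one common approach, single-primary replication with log shipping: the primary records every write in a log and replicas apply the entries they have not yet seen. The `Node` and `Primary` classes are hypothetical, and real systems add networking, failure detection and a choice between synchronous and asynchronous replication.

```python
class Node:
    """A single database node holding its own key-value copy of the data."""

    def __init__(self, name):
        self.name = name
        self.data = {}
        self.applied = 0  # number of log entries this node has applied


class Primary(Node):
    """Accepts writes, records them in a log and ships them to replicas."""

    def __init__(self, name, replicas):
        super().__init__(name)
        self.log = []
        self.replicas = replicas

    def write(self, key, value):
        self.log.append((key, value))
        self.data[key] = value
        self.applied = len(self.log)

    def sync(self):
        """Send each replica only the log entries it has not yet applied."""
        for replica in self.replicas:
            for key, value in self.log[replica.applied:]:
                replica.data[key] = value
            replica.applied = len(self.log)


replicas = [Node("replica-1"), Node("replica-2")]
primary = Primary("primary", replicas)
primary.write("user:1", "Alice")
primary.write("user:2", "Bob")
print(replicas[0].data)  # {} (not yet synchronised)
primary.sync()
print(all(r.data == primary.data for r in replicas))  # True
```

Because this sketch synchronises only when `sync()` is called, replicas can briefly lag behind the primary, which is the usual trade-off of asynchronous replication.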

7. Sharding

Sharding is the process of splitting the rows of a table across different nodes according to a shard key. It divides a table horizontally into partitions, called shards, and a single node can hold more than one shard when the workload calls for it.
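The sketch below illustrates hash-based sharding, one common way to choose a shard from a key. The shard count, the shard-to-node mapping and the `customers` rows are all hypothetical, and `zlib.crc32` is used only because it gives a stable hash across runs.

```python
import zlib

NUM_SHARDS = 4

def shard_for(key, num_shards=NUM_SHARDS):
    """Pick a shard by hashing the shard key.

    zlib.crc32 is used instead of Python's built-in hash() because hash()
    of a str is randomised per process, which would send the same key to
    different shards on different runs.
    """
    return zlib.crc32(str(key).encode("utf-8")) % num_shards

def shard_rows(rows, key_column, num_shards=NUM_SHARDS):
    """Split rows horizontally: every row lands on exactly one shard."""
    shards = {i: [] for i in range(num_shards)}
    for row in rows:
        shards[shard_for(row[key_column], num_shards)].append(row)
    return shards

# Hypothetical placement: each node holds two shards.
NODE_FOR_SHARD = {0: "node-a", 1: "node-a", 2: "node-b", 3: "node-b"}

customers = [{"customer_id": i, "name": f"customer-{i}"} for i in range(1, 11)]
shards = shard_rows(customers, "customer_id")
for shard_id, shard in shards.items():
    print(shard_id, NODE_FOR_SHARD[shard_id], [r["customer_id"] for r in shard])
```

One design caveat: with simple modulo hashing, changing `NUM_SHARDS` remaps most keys, which is why schemes such as consistent hashing or range-based sharding are often preferred when the cluster is expected to grow.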

Over 80 per cent of Australian corporations have used big data analytics for data management in the past 12 months. - International Data Corporation

These are the basic database concepts you need in order to master data mining and analysis. Engineers may not use every one of them in their day-to-day work, but data processing is central to every business, and the sheer volume of data involved is exactly why a firm grasp of these basics matters.


What are the Different Components of a Database System?

A database system is an organised collection of data together with the software used to store, manipulate and retrieve it, and it makes data management much easier. Textbook examples range from a phone directory to the databases behind social platforms such as Facebook. A database system has the following components:

Hardware

Hardware refers to the physical, tangible parts of the system: computers and servers, storage devices, and input/output devices such as keyboards, mice and webcams. It is the interface that connects the digital system to the physical world.

Software

Software is the set of programs used to manage and control the database and the tasks performed on it. Examples include the operating system, the database management software itself and network software.

Data

Data consists of raw, unorganised facts that need to be processed and organised, including observations, facts, perceptions, symbols and images. Database concepts are applied to store, process, analyse and replicate it.

Procedure

A procedure is a set of steps to be followed to reach a predefined outcome. Data processing involves several procedures, such as data collection, input, processing and output.

Database Access Language

A database access language is used to retrieve existing data from a database, enter new data and update stored data through the DBMS. SQL (Structured Query Language) is the most widely used example.
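Tying this back to the phone-directory example, the sketch below uses SQL through Python's built-in `sqlite3` module to define a table, enter data, update it and retrieve it. The `contacts` table and the phone numbers are hypothetical placeholders.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# Define the structure of the data.
conn.execute("CREATE TABLE contacts (name TEXT PRIMARY KEY, phone TEXT)")

# Enter new data; "?" placeholders let the driver bind values safely.
conn.execute("INSERT INTO contacts (name, phone) VALUES (?, ?)", ("Alice", "0400 000 001"))
conn.execute("INSERT INTO contacts (name, phone) VALUES (?, ?)", ("Bob", "0400 000 002"))

# Update stored data.
conn.execute("UPDATE contacts SET phone = ? WHERE name = ?", ("0400 000 003", "Bob"))
conn.commit()

# Retrieve data.
for name, phone in conn.execute("SELECT name, phone FROM contacts ORDER BY name"):
    print(name, phone)
```

Binding values with placeholders, rather than pasting them into the SQL string, also protects against SQL injection.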

Did you know that there are nearly 40,000 bytes of data per sand grain on Earth?

These are the basic components of a database system, and you should be familiar with them before working on a database. Together they ensure that each command produces the desired result; an error in any one of them will cause problems in data processing.

What are the Advantages of a DBMS?

A DBMS (Database Management System) is a collection of programs that lets users access a database, process the data and report the results. The concept emerged in the 1960s and has evolved considerably since. A DBMS offers several advantages:

- Offers a range of processes for storing and retrieving data

- Lets multiple applications share the same dataset efficiently

- Provides a uniform administration procedure

- Includes programs that make data retrieval and analysis effective

- Ensures data integrity and security

- Reduces the time needed to develop an application

Facial recognition in devices such as smartphones is a prominent example of supervised machine learning. With this technology, a device can detect, capture, store and analyse a person's facial features and use them to unlock the device. The user first has to register their face, providing the data the algorithm needs to recognise them.

Hopefully, you now have a clear idea of the basic database concepts and how they are used in data engineering in real-world scenarios. Data engineering is especially well suited to people with a deep interest in computer science and information technology, and a solid grasp of these concepts is essential for effective data mining.
